When Sarah applied for a small business loan through a fintech app, she was denied within minutes. Her payment history was flawless, her revenue solid, yet the algorithm rejected her.

The reason? A "black box" decision that the company couldn't explain. She later discovered that women entrepreneurs in her region received loans at half the rate of men, even with identical financial profiles.

Stories like Sarah's are becoming more common as artificial intelligence reshapes lending decisions worldwide. While AI-powered credit scoring promises to expand financial access to millions of people without traditional credit histories, it also carries a hidden cost: embedding gender bias into decisions that affect lives and livelihoods.

Understanding why this happens and how to fix it matters for fintech companies, regulators, and anyone seeking fair access to credit.

What Is AI-Based Credit Scoring and Why Does It Matter for Financial Inclusion

This section explains how modern credit decisions differ from traditional methods and why fairness is crucial for expanding access to financial services.

AI-based credit scoring systems use machine learning algorithms to evaluate creditworthiness based on alternative data sources. Instead of relying only on traditional credit histories, these systems analyze mobile phone usage patterns, transaction histories, payment behavior, utility bill payments, and other digital footprints.

In emerging markets and underserved communities, this approach has been transformative, enabling millions of people to access loans and financial services they were previously denied.

As financial institutions gain access to new types of data, the variety of credit scoring methods has grown significantly, with algorithms and artificial intelligence helping institutions make decisions from very large volumes of data.

In Africa, Asia, and Latin America, fintech lenders have used AI to bring financial inclusion to previously unbanked populations, creating real economic opportunity.

Mobile money platforms and alternative lending apps have reached over 500 million people globally who lack formal credit histories.

However, the promise of AI in lending comes with a critical challenge: these systems do not automatically produce fair outcomes.

Machine learning systems in the credit domain often entrench existing bias and prejudice against groups defined by race, sex, sexual orientation, and other attributes, even when lenders do not intend to discriminate.

The algorithms inherit the limitations and prejudices present in historical data and decision-making processes.

The Evidence: Do AI Credit Systems Create Gender Gaps in Lending

This section presents research findings on how gender bias shows up in AI lending and why it matters economically for millions of borrowers.

The research on AI credit scoring reveals a mixed but concerning picture. AI systems trained on biased data can amplify gender inequalities, reinforcing discrimination against women and girls in areas ranging from hiring to financial services.

While these systems do increase approval rates for some underserved populations, they do not automatically produce equitable outcomes between genders.

Racial, gender, and socio-economic disparities can show up in both credit approvals and interest rates. A key driver is the reliance on historical data that may reflect past discriminatory lending practices.

When algorithms learn from historical loan decisions, they absorb the prejudices embedded in those decisions.

For example, if historical data shows that women were approved for smaller loans, the algorithm learns to recommend smaller loan amounts for women applicants.

Real-world cases have drawn regulatory scrutiny. The New York Department of Financial Services investigated complaints that an AI-powered credit card gave women lower credit limits than men with similar financial profiles. The department did not find unlawful discrimination based on sex, but it criticized the lack of transparency in how the card's credit decisions were explained to customers.

High-profile cases like this one highlight a broader risk: "gender-blind" models can still produce gendered outcomes, because the features and proxy variables in their data reflect real social inequalities.

Women in emerging markets face particular challenges. Many have limited traditional credit histories but strong digital payment behaviors.

If algorithms are not explicitly designed to recognize these patterns as signals of creditworthiness, women entrepreneurs and informal workers remain invisible to AI lending systems, perpetuating financial exclusion.

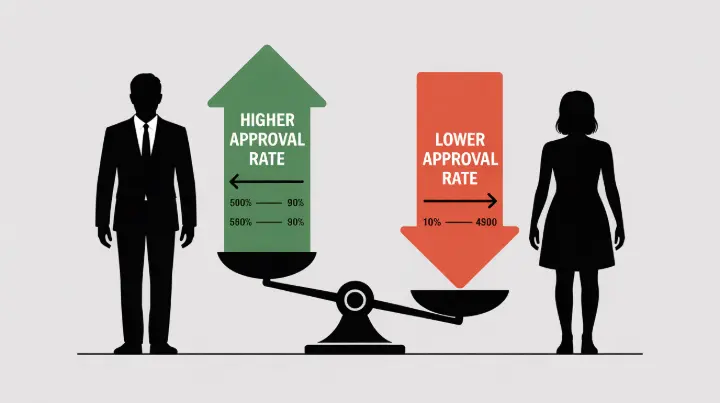

Studies have reported that women receive loans at significantly lower rates than men on fintech platforms that do not implement fairness measures.

Why Does Gender Bias Happen in AI Credit Systems

This section breaks down the technical and organizational reasons bias becomes embedded in lending algorithms, helping you understand root causes.

Gender bias in AI credit scoring is not accidental or inevitable. It emerges from specific decisions, data limitations, and organizational incentives. Understanding these root causes is essential for building fair systems that serve all populations.

Data Gaps and Proxy Variables Create Hidden Discrimination

Algorithms learn patterns from training data. If that data underrepresents women's financial behavior, for example because many women have informal incomes or sparse transaction histories, the model learns incomplete or distorted patterns.

To fill gaps, algorithms may use proxy variables that correlate with gender without explicitly naming it.

Consider a hypothetical example: a model uses spending patterns at certain retailers, time of day for transactions, or frequency of small purchases. Individually, these seem neutral. But when combined, they may encode gender because shopping behaviors are often gendered.

The algorithm never "sees" gender in its features, yet its predictions can still track gender closely. This is why even "gender-blind" models can produce gendered outcomes. Researchers have found that proxy variables like shopping behavior, social media activity, and app usage can all serve as hidden gender indicators.
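One way to check for hidden proxies is to try to predict gender from the supposedly neutral features. If a simple classifier does much better than always guessing the majority class, the features jointly encode gender. The sketch below assumes synthetic data in which three hypothetical behavioral features are each shifted only slightly by gender. It uses a plain-Python logistic regression for self-containment. In practice a team would use an established library and held-out data.

```python
import math
import random

def make_applicants(n, seed=0):
    """Hypothetical synthetic data: three behavioral features (evening-transaction
    share, small-purchase frequency, share of spending at one retailer), each
    shifted only slightly by gender. The shift size is an assumption."""
    rng = random.Random(seed)
    X, gender = [], []
    for _ in range(n):
        g = 1 if rng.random() < 0.5 else 0
        X.append([rng.gauss(0.4 + 0.1 * g, 0.15) for _ in range(3)])
        gender.append(g)
    return X, gender

def standardize(X):
    """Scale each feature column to mean 0 and standard deviation 1."""
    cols = list(zip(*X))
    mus = [sum(c) / len(c) for c in cols]
    sds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c)) for c, m in zip(cols, mus)]
    return [[(v - m) / s for v, m, s in zip(row, mus, sds)] for row in X]

def proxy_audit(X, gender, lr=0.5, epochs=200):
    """Fit a logistic regression that predicts gender from the features.
    Returns (accuracy, majority-class base rate)."""
    Xs = standardize(X)
    n, d = len(Xs), len(Xs[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):  # full-batch gradient descent on log loss
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(Xs, gender):
            z = b + sum(wj * xj for wj, xj in zip(w, xi))
            err = 1.0 / (1.0 + math.exp(-z)) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    preds = [1 if b + sum(wj * xj for wj, xj in zip(w, xi)) > 0 else 0 for xi in Xs]
    accuracy = sum(p == y for p, y in zip(preds, gender)) / n
    base_rate = max(sum(gender), n - sum(gender)) / n
    return accuracy, base_rate

X, gender = make_applicants(600)
acc, base = proxy_audit(X, gender)
print(f"gender predicted with accuracy {acc:.2f} (majority guess: {base:.2f})")
```

A gap well above the base rate signals that the features jointly act as a gender proxy, even if no single feature looks suspicious. Running such an audit requires gender labels for testing, which many lenders do not routinely hold.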

Design Choices and Organizational Priorities Affect Fairness Outcomes

Teams often assume that data is neutral and that bigger datasets automatically mean better predictions. In reality, data reflects the world as it was, not as it should be. When organizations prioritize scale, speed, and profit over fairness, they skip the harder work of testing whether outcomes are equitable across genders.

Some experts argue that algorithms run into trouble because financial institutions are legally prohibited from incorporating factors like ethnicity, gender, or race into their models. These prohibitions, intended to prevent discrimination, can block lenders from identifying and addressing bias. When fairness is not explicitly engineered into model design, it does not happen by default.

Lack of Gender-Disaggregated Evaluation Hides Disparities

Many teams evaluate their models on aggregate metrics: overall accuracy, approval rate, default rate. Without measuring outcomes separately by gender, age, geography, and other groups, harms go undetected.

A model might achieve 85 percent accuracy overall yet perform noticeably worse for women than for men, and the aggregate figure would hide that disparity.
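Here is a minimal sketch of disaggregated evaluation. It computes accuracy overall and per group from (group, actual, predicted) records. The evaluation set is hypothetical, with counts chosen so that the overall figure matches the 85 percent example.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, actual, predicted). Returns (overall, per_group)."""
    correct, total = defaultdict(int), defaultdict(int)
    for group, actual, predicted in records:
        total[group] += 1
        correct[group] += actual == predicted
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group

# Hypothetical evaluation set: 70 men (63 correct), 30 women (22 correct).
# actual = 1 means the applicant repaid; predicted = 1 means the model said so.
records = (
    [("men", 1, 1)] * 63 + [("men", 1, 0)] * 7
    + [("women", 1, 1)] * 22 + [("women", 1, 0)] * 8
)
overall, per_group = accuracy_by_group(records)
print(f"overall: {overall:.0%}")  # overall: 85%
for group, acc in per_group.items():
    print(f"{group}: {acc:.0%}")  # men: 90%, then women: 73%
```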

Building fair credit systems requires intentional measurement. Teams must ask: Do women and men have equal approval rates when controlling for financial behavior? Do they receive similar interest rates and loan amounts? Do they experience similar approval speeds and denial reasons? Without this disaggregated analysis, bias remains invisible and perpetuates unfair lending practices.
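The first question, whether approval rates match once financial behavior is held constant, can be sketched by comparing approval rates within bands of a financial variable. The debt-to-income (DTI) bands and counts below are hypothetical. A real analysis would control for more variables, for example with a regression, and would test whether the gaps are statistically significant.

```python
from collections import defaultdict

def approval_rates_by_band(applications):
    """applications: (gender, dti_band, approved). Returns {band: {gender: rate}}."""
    counts = defaultdict(lambda: [0, 0])  # (band, gender) -> [approved, total]
    for gender, band, approved in applications:
        counts[(band, gender)][0] += approved
        counts[(band, gender)][1] += 1
    rates = defaultdict(dict)
    for (band, gender), (approved, total) in counts.items():
        rates[band][gender] = approved / total
    return dict(rates)

# Hypothetical applications, grouped by debt-to-income band.
applications = (
    [("man", "low DTI", True)] * 45 + [("man", "low DTI", False)] * 5
    + [("woman", "low DTI", True)] * 35 + [("woman", "low DTI", False)] * 15
    + [("man", "high DTI", True)] * 20 + [("man", "high DTI", False)] * 30
    + [("woman", "high DTI", True)] * 10 + [("woman", "high DTI", False)] * 40
)
rates = approval_rates_by_band(applications)
for band, by_gender in rates.items():
    gap = by_gender["man"] - by_gender["woman"]
    print(f"{band}: men {by_gender['man']:.0%}, women {by_gender['woman']:.0%}, gap {gap:.0%}")
```

In this invented data the gap persists within each band, so it cannot be explained by differences in debt-to-income ratio alone.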

How Biased Credit Scoring Affects Women and Vulnerable Groups Economically

This section explains the real-world consequences of bias in AI lending decisions for individuals and economies.

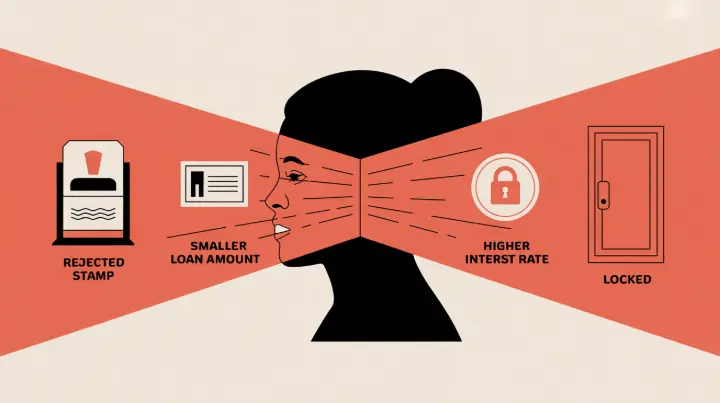

When AI systems embed gender bias into credit decisions, the consequences are practical and severe.

Women and marginalized groups may have fewer loans approved overall, or may receive conditional approvals with stricter requirements than men in similar circumstances.

Smaller loan amounts limit business growth or personal financial flexibility. Higher interest rates and less favorable terms increase the cost of borrowing significantly over time.
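A short calculation shows how quickly a rate difference adds up. The sketch below uses the standard fixed-rate amortization formula, payment = P * r / (1 - (1 + r)^(-n)), with monthly rate r and n monthly payments. It compares the total interest on the same hypothetical loan at two rates. The loan size, term, and rates are invented for illustration.

```python
def monthly_payment(principal: float, annual_rate: float, months: int) -> float:
    """Standard payment for a fixed-rate, fully amortizing loan."""
    r = annual_rate / 12
    if r == 0:
        return principal / months
    return principal * r / (1 - (1 + r) ** -months)

def total_interest(principal: float, annual_rate: float, months: int) -> float:
    """Total paid over the life of the loan minus the amount borrowed."""
    return monthly_payment(principal, annual_rate, months) * months - principal

# Hypothetical: the same $10,000, 36-month loan priced at 12% vs. 15% APR.
base = total_interest(10_000, 0.12, 36)
higher = total_interest(10_000, 0.15, 36)
print(f"interest at 12%: ${base:,.2f}")
print(f"interest at 15%: ${higher:,.2f}")
print(f"extra cost of the higher rate: ${higher - base:,.2f}")
```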

Opaque rejections with generic reasons provide no actionable feedback, leaving applicants with no way to know how to improve their applications.

These individual harms add up to economic disadvantage. Entrepreneurs without access to affordable credit cannot scale their businesses, students cannot finance their education, and small farmers cannot invest in equipment or seeds.

When half the population faces systematic barriers to credit, entire economies fall short of their growth potential. Some research suggests that gender gaps in credit access reduce GDP growth by 1 to 3 percent annually in developing economies.

The stakes are particularly high in developing economies where formal credit remains scarce.

Credit scoring has the potential to improve access to capital and financial inclusion around the world, but only if systems are designed to be fair. Reproducing gender inequality through AI would mean missing a historic opportunity to expand prosperity and create economic opportunity.

How to Fix Bias: Practical Approaches for Building Fair AI Credit Systems

This section provides concrete, actionable steps companies can take to build fairer lending algorithms that serve all populations equitably.

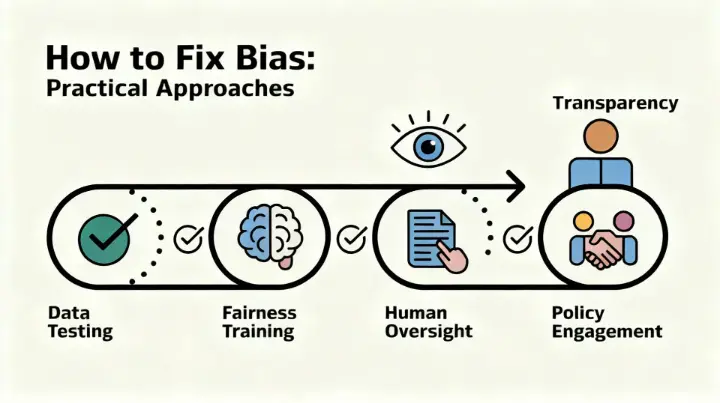

Building fair AI credit systems requires systematic action across data, modeling, transparency, governance, and policy. Here are the key practices that leading fintech companies are implementing today.

Collect and Test on Gender-Disaggregated Data Throughout Development

Start by understanding your data completely. Ensure your training dataset includes sufficient representation of women, low-income applicants, and other underserved groups. Measure model performance separately by gender, age, geography, and other relevant groupings, including legally protected characteristics. Calculate group-level statistics such as approval rates, disparate impact ratios, and false positive and false negative rates.

A model might reject 2 percent of wealthy men but 8 percent of low-income women, a fourfold gap in rejection rates. Gaps like this become visible only with disaggregated testing.
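These group statistics can be sketched in a few lines. The sketch below treats an approval as the positive prediction. It assumes a hypothetical validation set where repayment outcomes are known for every applicant, including rejected ones. In practice, outcomes for rejected applicants are rarely observed, so this is a simplification. The second function compares two groups using the 2 percent and 8 percent rejection rates from the example.

```python
def error_rates(decisions):
    """decisions: (approved, repaid) pairs for one group, with outcomes known.
    Returns the approval rate, false positive rate, and false negative rate."""
    n = len(decisions)
    repaid = [approved for approved, ok in decisions if ok]
    defaulted = [approved for approved, ok in decisions if not ok]
    return {
        "approval_rate": sum(a for a, _ in decisions) / n,
        # false positive: approved an applicant who later defaulted
        "fpr": sum(defaulted) / len(defaulted) if defaulted else 0.0,
        # false negative: rejected an applicant who would have repaid
        "fnr": sum(not a for a in repaid) / len(repaid) if repaid else 0.0,
    }

def compare(rejection_a: float, rejection_b: float):
    """Disparity between two groups, measured on rejections and on approvals."""
    return {
        "rejection_ratio": rejection_b / rejection_a,
        "approval_ratio": (1 - rejection_b) / (1 - rejection_a),
    }

print(compare(0.02, 0.08))  # the 2% vs. 8% rejection example

# Hypothetical validation decisions for one group: (approved, repaid).
women = ([(True, True)] * 40 + [(False, True)] * 10
         + [(True, False)] * 5 + [(False, False)] * 45)
print(error_rates(women))
```

On these numbers, the rejection-rate ratio is 4. The approval-rate ratio is about 0.94, which would pass the "four-fifths" screen that is often borrowed from US employment guidelines. The same disparity can look large or small depending on which rate is compared.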

Once identified, they can be addressed through retraining, reweighting, or other technical fixes. Companies should test at multiple stages: initial model development, before deployment, and continuously after launch.
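Reweighting can be sketched with the classic reweighing scheme from the fairness literature. Each training example gets the weight P(group) * P(label) / P(group, label). In the weighted data, the historical approval label is then statistically independent of gender. The groups, labels, and counts below are hypothetical. The resulting weights would go to a learner that accepts per-example weights, such as the `sample_weight` argument of scikit-learn's `LogisticRegression.fit`. Note that equalizing historical approval rates this way encodes one specific fairness goal, which is itself a value choice.

```python
from collections import Counter

def reweigh(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label), computed
    from counts, so that the label is independent of group after weighting."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_approval_rate(groups, labels, weights, group):
    """Share of (weighted) historical approvals within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den

# Hypothetical history: 80 of 100 men approved, 50 of 100 women approved.
groups = ["man"] * 100 + ["woman"] * 100
labels = [1] * 80 + [0] * 20 + [1] * 50 + [0] * 50
weights = reweigh(groups, labels)
for g in ("man", "woman"):
    print(g, round(weighted_approval_rate(groups, labels, weights, g), 3))
```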

Use Fairness-Aware Training and Robust Evaluation Metrics

Modern machine learning offers several fairness techniques that balance performance with equity. Preprocessing methods adjust training data to remove or reduce bias.

Constrained optimization techniques build fairness constraints directly into model training, trading off some accuracy for equity. Post-processing methods adjust model outputs to meet fairness targets.
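As one illustration of post-processing, the sketch below picks a separate score cutoff for each group so that each group reaches the same target approval rate. This is only one of several post-processing strategies. The scores and the 50 percent target are hypothetical. Because the method applies different cutoffs by gender, it uses the protected attribute directly at decision time, which may itself raise legal concerns in lending.

```python
def threshold_for_rate(scores, target_rate):
    """Score cutoff that approves about target_rate of this group
    (ties at the cutoff can push the rate slightly higher)."""
    ranked = sorted(scores, reverse=True)
    k = max(1, round(target_rate * len(ranked)))
    return ranked[k - 1]

# Hypothetical model scores for ten applicants in each group.
men_scores = [0.9, 0.8, 0.75, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1]
women_scores = [0.8, 0.65, 0.55, 0.5, 0.45, 0.4, 0.35, 0.3, 0.2, 0.1]

# A single cutoff of 0.6 approves 5 men but only 2 women.
print(sum(s >= 0.6 for s in men_scores), sum(s >= 0.6 for s in women_scores))

# Group-specific cutoffs chosen to approve 50% of each group.
men_cut = threshold_for_rate(men_scores, 0.5)
women_cut = threshold_for_rate(women_scores, 0.5)
print(men_cut, women_cut)
print(sum(s >= men_cut for s in men_scores), sum(s >= women_cut for s in women_scores))
```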

Equally important is choosing the right fairness metric. Different fairness definitions can conflict with each other, so teams must decide: Do we want equal approval rates across groups, or similar false negative rates? Do we want to equalize benefit or equalize risk? These choices reflect values and require stakeholder input from diverse perspectives.
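A small hypothetical example shows how two common metrics can disagree. In the example, two groups have different shares of applicants who would repay, and each group receives exactly six approvals. Approval rates are therefore equal, but false negative rates are not. The groups and counts are invented for illustration.

```python
def approval_rate(decisions):
    """decisions: (approved, would_repay) pairs."""
    return sum(approved for approved, _ in decisions) / len(decisions)

def false_negative_rate(decisions):
    """Share of applicants who would repay but were rejected."""
    repayers = [approved for approved, repays in decisions if repays]
    return sum(not a for a in repayers) / len(repayers)

# Hypothetical: group A has 8 repayers out of 10, group B has 5 out of 10.
group_a = [(True, True)] * 6 + [(False, True)] * 2 + [(False, False)] * 2
group_b = [(True, True)] * 5 + [(True, False)] * 1 + [(False, False)] * 4

for name, group in (("A", group_a), ("B", group_b)):
    print(name, approval_rate(group), false_negative_rate(group))
```

Both groups have a 60 percent approval rate, but a quarter of group A's repayers are rejected while none of group B's are. Approving all of group A's repayers would equalize false negative rates but break the equal approval rates, so fixing one metric can break the other.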

Provide Clear, Specific Explanations When Denying Credit to Applicants

In the United States, creditors that use complex algorithms, including artificial intelligence, must still provide adverse action notices that disclose the specific, principal reasons for denying credit or taking other adverse actions. This is not just a legal requirement; it is essential for fairness and trust.

When an applicant is denied, they should receive a substantive reason: "Your debt-to-income ratio exceeded our threshold" or "Your savings relative to loan amount fell below our requirement," not vague statements like "failed credit check." Specific reasons allow applicants to improve and reapply, and they expose discrimination if it exists.
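For a points-based scorecard, one common way to generate specific reasons is to rank features by how many points the applicant lost relative to the maximum available. The sketch below assumes a hypothetical three-feature scorecard. The feature names, point values, and reason wording are illustrative. Complex models would need an attribution method, such as Shapley-value explanations, to produce comparable rankings, and those explanations should be checked for accuracy before being used in notices.

```python
# Hypothetical points-based scorecard: each feature earns points up to a maximum.
MAX_POINTS = {"debt_to_income": 120, "savings_to_loan": 100, "payment_history": 150}

# Hypothetical reason wording for each feature.
REASON_TEXT = {
    "debt_to_income": "Your debt-to-income ratio exceeded our threshold",
    "savings_to_loan": "Your savings relative to loan amount fell below our requirement",
    "payment_history": "Your history of on-time payments was limited",
}

def principal_reasons(points: dict, top_n: int = 2) -> list:
    """Rank features by points lost relative to the maximum and return
    the reason text for the largest shortfalls."""
    shortfall = {f: MAX_POINTS[f] - points[f] for f in MAX_POINTS}
    ranked = sorted((f for f in shortfall if shortfall[f] > 0),
                    key=lambda f: shortfall[f], reverse=True)
    return [REASON_TEXT[f] for f in ranked[:top_n]]

applicant = {"debt_to_income": 40, "savings_to_loan": 70, "payment_history": 145}
reasons = principal_reasons(applicant)
for reason in reasons:
    print("-", reason)
```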

Implement Human Oversight, Appeals Processes, and Regular Monitoring

Algorithms should inform decisions, not replace human judgment entirely. Keep humans in the loop for borderline cases, for appeals, and for monitoring model behavior over time. As lending behavior changes, customer demographics shift, and markets evolve, algorithm performance drifts. Regular human review catches problems before they affect thousands of applicants.
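Drift monitoring is often done with the Population Stability Index (PSI). PSI compares how scores, or an input feature, are distributed across bins now versus at a baseline. The sketch below computes PSI from bin counts. Running it separately for women and men links drift monitoring back to fairness. The bin counts are hypothetical, and the 0.1 and 0.25 cutoffs are widely used rules of thumb, not a regulatory standard.

```python
import math

def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between a baseline and a recent distribution,
    given counts in the same score bins (same order)."""
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    value = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)  # floor avoids log(0) for empty bins
        a_pct = max(a / a_total, eps)
        value += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return value

def psi_label(value):
    """Commonly cited rules of thumb, not regulatory thresholds."""
    if value < 0.1:
        return "little shift"
    if value < 0.25:
        return "moderate shift"
    return "significant shift"

# Hypothetical score-bin counts for women applicants: at launch vs. this quarter.
baseline = [100, 200, 400, 200, 100]
recent = [50, 150, 350, 250, 200]
value = psi(baseline, recent)
print(f"PSI = {value:.3f} ({psi_label(value)})")
```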

Create a clear appeals process where denied applicants can request human review. This serves both fairness and accuracy: humans catch mistakes, and they can consider context that algorithms miss.

Forward-thinking companies allocate 5 to 10 percent of decisions to human review specifically to catch algorithmic errors.
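Allocating a share of decisions to human review can be as simple as routing borderline scores plus a fixed audit sample. The sketch below assumes scores between 0 and 1, a hypothetical cutoff of 0.5, a borderline band of 0.05 on either side, and a 5 percent audit sample drawn from all other decisions, including approvals. These parameters are illustrative, not recommendations. Hashing the application ID makes the sample reproducible, so the same application always gets the same routing decision.

```python
import hashlib

def needs_human_review(application_id: str, score: float,
                       cutoff: float = 0.5, band: float = 0.05,
                       audit_rate: float = 0.05) -> bool:
    """Route borderline scores, plus a pseudo-random audit sample, to a human."""
    if abs(score - cutoff) <= band:
        return True  # too close to the cutoff to decide automatically
    # Map a hash of the ID to [0, 1) so sampling is deterministic per application.
    digest = hashlib.sha256(application_id.encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < audit_rate

# Share of clear-cut decisions that still go to a reviewer (about 5%).
sampled = sum(needs_human_review(f"app-{i}", 0.9) for i in range(10_000))
print(f"audit share: {sampled / 10_000:.1%}")
```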

Engage with Policy, Regulators, and Gender Experts From the Beginning

Build diverse teams that include data scientists, product managers, customer service representatives, and external experts on gender, financial inclusion, and fairness. Consult with regulators to understand their expectations. Engage with NGOs and community organizations serving underserved populations to understand real-world impacts.

External perspectives catch blind spots. A diverse team is more likely to ask important questions: How will this affect women entrepreneurs? What happens to applicants without formal employment? What if internet access is unreliable in some regions? These questions lead to better, fairer systems that work for everyone.

Regulatory Landscape: What Financial Institutions Must Do to Stay Compliant

This section explains regulatory expectations and what institutions must implement to avoid penalties and build trust.

Regulators worldwide are increasingly focused on algorithmic fairness in finance. In the United States, the Consumer Financial Protection Bureau has issued guidance making clear that existing fair lending and adverse action requirements apply in full to institutions that use AI or other complex algorithms in credit decisions.

The key regulatory principle is clear: there is no "innovation exemption" from discrimination law. Automated systems and advanced technology are not an excuse for lawbreaking behavior.

Regulators are working together to make clear that the use of advanced technologies including artificial intelligence must be consistent with federal laws protecting against discrimination.

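For international institutions, requirements are tightening elsewhere as well. In the European Union, the AI Act classifies AI systems used to evaluate the creditworthiness of individuals as high-risk, which brings obligations around data governance, documentation, and human oversight. Financial authorities in the United Kingdom are similarly tightening their expectations.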

Boards are increasingly expected to take responsibility for AI use in banking. Regular bias audits are becoming a standard expectation, and transparency reports on algorithmic fairness are increasingly common in regulatory compliance frameworks.

The compliance landscape is clear: companies that build fairness into systems from the start face lower regulatory risk and build customer trust.

Companies that ignore bias face investigations, penalties, and reputational damage that can be devastating to their business and brand.