Agentic AI, meaning autonomous or semi-autonomous systems that can make decisions and act on them, is moving fast from lab experiments into production in finance. But as with any powerful tool, timing and guardrails matter. Done well, agentic AI cuts manual toil, speeds decisions, and prevents losses.

Done poorly, it automates mistakes at scale. This post is a practical playbook: a quick readiness diagnostic, pilot ideas, governance must-haves, and the measurable KPIs you need to decide whether to run a pilot today.

What is Agentic AI and Why It Matters to Finance

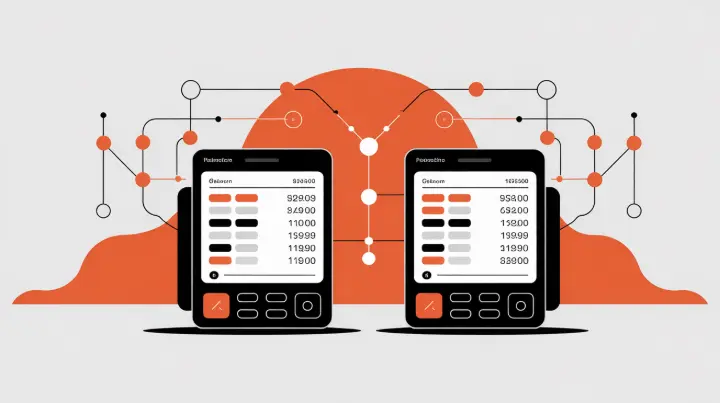

Think of agentic AI as a digital colleague that doesn't just analyze data or make recommendations, but actually takes action. Unlike traditional AI that simply flags a suspicious transaction, an agentic system can hold the payment, notify the right team, and start gathering supporting evidence - all in seconds.

For banks, fintechs, and asset managers dealing with thousands of daily decisions, this shift from "AI suggests" to "AI acts" represents a fundamental change in how operations scale.

The promise is compelling: faster fraud detection, instant loan pre-screening, automated reconciliation, and the ability to handle volume spikes without hiring sprees.

But the technology only works when your underlying infrastructure (data quality, integration capabilities, and governance frameworks) can support it. Rush in without those foundations, and you'll automate chaos instead of creating efficiency.

Why Finance is a Natural Fit for Agentic Systems

Financial services runs on decisions. Approve a loan, flag a trade, block a payment, reconcile an account. Many of those decisions are repetitive, rule-driven, and time-sensitive - exactly the things machines excel at.

Add rising fraud sophistication, tighter margins, and customer expectations for instant responses, and you have strong economic pressure to automate intelligently.

The challenge isn't whether automation makes sense, but whether your organization can deploy it safely.

Every financial decision carries regulatory weight, and every automated action creates an audit trail that compliance teams will scrutinize. The firms winning with agentic AI aren't necessarily the most technically advanced.

They're the ones who matched the technology to workflows with clean data, clear rules, and manageable regulatory constraints.

The Nine-Question Readiness Diagnostic

Before launching any pilot, run a quick internal assessment. Gather stakeholders from operations, compliance, IT, and risk management for a one-hour workshop. Score your organization on nine dimensions, using a zero-to-three scale for each:

- Do you have high-volume, repetitive decision workflows where real-time responses would cut cost or risk?

- Are there measurable manual bottlenecks slowing your team down?

- Is your data centralized and high-quality, or scattered across legacy systems?

- Do you have APIs and integration points ready, or would connecting systems require months of work?

- Do you have model governance frameworks in place, or the willingness to build them?

- Are your compliance and regulatory constraints manageable for the use cases you're considering?

- Does your engineering and operations team have the capacity to support deployment, monitoring, and rapid rollback if needed?

- Do you have a clear business case with concrete ROI metrics, not just vague efficiency hopes?

- Can you measure success objectively within weeks, not months?

Add up your scores. If you're hitting twenty to twenty-seven points, you're ready to pilot.

A score between twelve and nineteen means you should patch specific gaps first - maybe your data needs cleaning, or your compliance team needs education on model risk management.

Below twelve, pause and focus on foundational work around data quality and governance before attempting automation.
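If you want to tally the workshop results consistently, the scoring bands above can be sketched in a few lines of Python. This is only an illustration: the function name `readiness_band` and the band labels are made up for this example, while the nine questions, the zero-to-three scale, and the cutoffs come from the diagnostic above.

```python
from typing import Sequence


def readiness_band(scores: Sequence[int]) -> str:
    """Map nine 0-3 scores to one of the three readiness bands.

    The function name and band labels are hypothetical; the cutoffs
    (20-27, 12-19, below 12) follow the diagnostic in the post.
    """
    if len(scores) != 9:
        raise ValueError("expected exactly nine scores, one per question")
    if any(s not in (0, 1, 2, 3) for s in scores):
        raise ValueError("each score must be an integer from 0 to 3")

    total = sum(scores)
    if total >= 20:
        return "ready to pilot"
    if total >= 12:
        return "patch specific gaps first"
    return "pause and build foundations"


if __name__ == "__main__":
    workshop_scores = [3, 2, 2, 1, 2, 3, 2, 3, 2]  # example values only
    print(sum(workshop_scores), readiness_band(workshop_scores))
```

The total is still just a conversation starter: a low score on a single dimension, such as data quality, can matter more than the sum suggests.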

Smart Pilot Targets: High Value, Low Risk

The best first pilots share three traits: they're high-volume, they have clear success metrics, and they sit in lower-regulatory-risk zones where you can experiment safely. Fraud triage is a classic example.

An agent can propose holds for high-confidence cases while routing medium- and low-confidence cases to human investigators. The ROI shows up quickly in reduced losses and freed investigator time, and you maintain human oversight where uncertainty exists.
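As a rough sketch of that routing rule, assuming your fraud model emits a confidence score between 0 and 1: the 0.90 cutoff, the `TriageResult` type, and the action names below are all placeholders for illustration, not recommended values or a real product's API.

```python
from dataclasses import dataclass

# Hypothetical cutoff; a real value would come from your own model validation.
HIGH_CONFIDENCE = 0.90


@dataclass(frozen=True)
class TriageResult:
    transaction_id: str
    action: str  # "propose_hold" or "route_to_investigator"
    reason: str


def triage(transaction_id: str, fraud_confidence: float,
           high_threshold: float = HIGH_CONFIDENCE) -> TriageResult:
    """Propose a hold only for high-confidence cases; send the rest to humans."""
    if not 0.0 <= fraud_confidence <= 1.0:
        raise ValueError("fraud_confidence must be between 0 and 1")

    if fraud_confidence >= high_threshold:
        return TriageResult(transaction_id, "propose_hold",
                            f"confidence {fraud_confidence:.2f} >= {high_threshold:.2f}")
    # Medium- and low-confidence cases stay with human investigators.
    return TriageResult(transaction_id, "route_to_investigator",
                        f"confidence {fraud_confidence:.2f} < {high_threshold:.2f}")
```

Note that even the high-confidence branch only proposes a hold. Whether the agent may place it automatically is a separate permission decision.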

Loan pre-screening offers another strong pilot opportunity. Agents can extract documents, fill missing fields, validate information against external databases, and route complete applications to underwriters.

This doesn't replace the underwriting decision - it accelerates the pipeline and improves conversion by reducing application abandonment.

Treasury reconciliation makes a third excellent target, where agents suggest fixes for mismatches and automate low-risk corrections, cutting manual reconciliation hours while flagging complex discrepancies for human review.
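Here is one way the reconciliation split could look in code, as a minimal sketch rather than a production design. It assumes both sides are keyed by a shared reference ID, and it treats a difference of up to five cents as "low-risk"; that tolerance, the function name, and the bucket names are assumptions made for the example.

```python
from decimal import Decimal
from typing import Dict, List

# Hypothetical tolerance: differences at or below this are treated as low-risk.
AUTO_FIX_TOLERANCE = Decimal("0.05")


def reconcile(ledger: Dict[str, Decimal], bank: Dict[str, Decimal],
              tolerance: Decimal = AUTO_FIX_TOLERANCE) -> Dict[str, List[str]]:
    """Bucket each reference ID as matched, auto-fixable, or flagged for review."""
    buckets: Dict[str, List[str]] = {"matched": [], "auto_fixed": [], "flagged": []}

    for ref in sorted(ledger.keys() | bank.keys()):
        # An entry missing on either side is never auto-corrected.
        if ref not in ledger or ref not in bank:
            buckets["flagged"].append(ref)
            continue

        diff = abs(ledger[ref] - bank[ref])
        if diff == 0:
            buckets["matched"].append(ref)
        elif diff <= tolerance:
            buckets["auto_fixed"].append(ref)
        else:
            buckets["flagged"].append(ref)

    return buckets
```

Amounts are `Decimal` rather than `float` so that small differences are compared exactly instead of being blurred by binary rounding.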

Running a Safe Experiment

A successful pilot follows a disciplined structure. Start in a true sandbox environment, isolated from production systems, with clear roles assigned: who owns the agent, who monitors it, who can shut it down.

Define your KPIs upfront - both primary metrics like dollars saved or time reduced, and secondary metrics like false positive rates and human override frequency.

Build in human-in-the-loop checkpoints for any high-impact decisions, and create an operator-facing kill switch that doesn't require engineering intervention to activate.

Plan for a four-to-eight-week initial run, then move to a canary rollout where the agent handles a small percentage of real traffic while you monitor behavior closely. Instrument everything: log every decision, every override, every edge case.

These logs become your evidence base for both scaling decisions and regulatory conversations.
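To make the checkpoint, kill switch, and logging ideas concrete, here is a small in-memory sketch. The `AgentRuntime` class and its method names are invented for illustration; a real deployment would persist the log to durable, tamper-evident storage and put the kill switch behind proper access controls.

```python
import json
import time
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentRuntime:
    """Hypothetical wrapper that gates agent actions and logs every event."""
    enabled: bool = True
    log: List[str] = field(default_factory=list)

    def _record(self, event: str, **details: str) -> None:
        # One JSON line per event keeps the log easy to search and audit.
        entry = {"ts": time.time(), "event": event, **details}
        self.log.append(json.dumps(entry, sort_keys=True))

    def kill(self, operator: str) -> None:
        """Operator-facing kill switch: a flag flip, no code deploy needed."""
        self.enabled = False
        self._record("kill_switch", operator=operator)

    def act(self, decision_id: str, action: str, high_impact: bool) -> str:
        if not self.enabled:
            outcome = "blocked_agent_disabled"
        elif high_impact:
            outcome = "pending_human_approval"  # human-in-the-loop checkpoint
        else:
            outcome = "executed"
        self._record("decision", decision_id=decision_id,
                     action=action, outcome=outcome)
        return outcome

    def record_override(self, decision_id: str, reviewer: str, new_action: str) -> None:
        self._record("override", decision_id=decision_id,
                     reviewer=reviewer, new_action=new_action)
```

Because blocked and pending decisions are logged alongside executed ones, override frequency and the share of fully automated actions can be computed straight from the log.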

Governance, Risk, and Regulatory Reality

Regulators expect explainability, audit logs, and human oversight for decisions that impact customers or firm risk.

Your agentic AI implementation needs explicit model risk controls including version tracking, drift detection, and performance monitoring. Set role-based permissions that limit what agents can do financially - maybe they can propose but not execute transactions above certain thresholds.
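The "propose but not execute above a threshold" rule can be expressed as a simple lookup. The role names and dollar limits here are hypothetical placeholders; in practice they would be set by your risk and compliance teams and stored in your access-control system.

```python
from decimal import Decimal

# Hypothetical per-role execution limits, in account currency.
# A limit of zero means the agent may only ever propose.
EXECUTION_LIMITS = {
    "reconciliation_agent": Decimal("500"),
    "fraud_agent": Decimal("0"),
}


def authorize(role: str, amount: Decimal) -> str:
    """Return "execute", "propose", or "deny" for an agent's requested action."""
    if amount < 0:
        raise ValueError("amount must be non-negative")

    limit = EXECUTION_LIMITS.get(role)
    if limit is None:
        return "deny"  # unknown roles get no financial permissions at all
    if amount <= limit:
        return "execute"
    return "propose"  # above the limit, a human must approve
```

Denying unknown roles by default means a misconfigured or newly added agent cannot move money until someone grants it a limit explicitly.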

Test adversarial scenarios: how does your agent behave with malformed inputs, missing data, or coordinated attack patterns? These aren't theoretical concerns; they're the questions your internal audit and regulators will ask.

Measuring Success and Making the Go/No-Go Call

Define your primary KPI first, whether that's prevented fraud dollars, decision time reduction, FTE hours saved, or conversion lift. Track secondary metrics like false positive rates, human override frequency, percentage of actions fully automated, and incidents requiring manual rollback.

Look for statistical significance, not just directional improvement, and set clear rollback criteria before the pilot starts: for example, roll back if human override rates exceed an agreed percentage or if compliance raises specific concerns.
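One simple way to check significance, assuming your primary KPI is a rate (for example, the share of fraud cases caught), is a pooled two-proportion z-test against your pre-pilot baseline. The sketch below combines that with a predefined override threshold. The 15% override limit, the 0.05 significance level, and the function names are illustrative assumptions, not recommendations.

```python
import math


def two_proportion_p_value(success_a: int, n_a: int,
                           success_b: int, n_b: int) -> float:
    """Two-sided p-value for a difference in proportions (pooled z-test)."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("sample sizes must be positive")
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (success_a / n_a - success_b / n_b) / std_err
    return math.erfc(abs(z) / math.sqrt(2))


def go_no_go(pilot_success: int, pilot_n: int,
             base_success: int, base_n: int,
             overrides: int,
             max_override_rate: float = 0.15,  # hypothetical threshold
             alpha: float = 0.05) -> str:      # hypothetical significance level
    """Apply predefined criteria: rollback, go, or extend the pilot."""
    p_value = two_proportion_p_value(pilot_success, pilot_n, base_success, base_n)
    if overrides / pilot_n > max_override_rate:
        return "rollback"
    improved = pilot_success / pilot_n > base_success / base_n
    if improved and p_value < alpha:
        return "go"
    return "extend pilot"
```

The z-test is a large-sample approximation, so for small pilots or non-rate KPIs (dollars saved, hours saved) a different test may be more appropriate. Compliance objections are a qualitative trigger and belong in the rollback criteria even though they are not modeled here.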

When Not to Implement Yet

Some warning signs should pause your plans. If you're considering automation for core decisions where explainability is legally required but technically unavailable, stop. If your data is siloed across systems with no integration plan, or if it's stale and unreliable, fix that first.

If you lack monitoring infrastructure to detect when agents drift or fail, or if compliance and legal teams haven't bought into the approach, you'll create more problems than you solve.

Your Next Steps

Agentic AI is not magic; it's leverage. When the underlying plumbing is solid, agents turn repetitive decisions into scale advantages. When those foundations are missing, agents magnify problems. Start by running that one-hour scoring workshop with your cross-functional team.

If you score high, identify your pilot use case and secure a small budget. If you score low, create a ninety-day roadmap to address your biggest gaps. Either way, you'll know within weeks whether you're ready to pilot or need to build foundations first. The firms that move thoughtfully now will have operational advantages that compound for years.